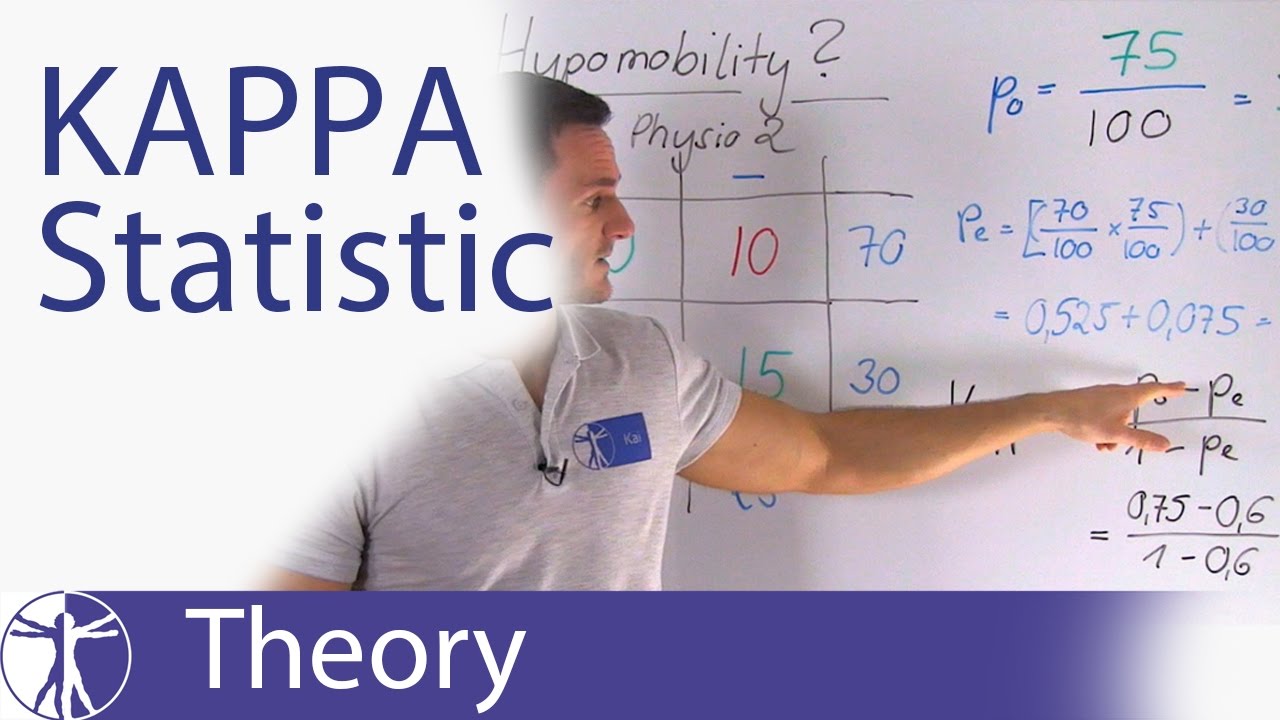

Interrater Reliability of the Postoperative Epidural Fibrosis Classification: A Histopathologic Study in the Rat Model

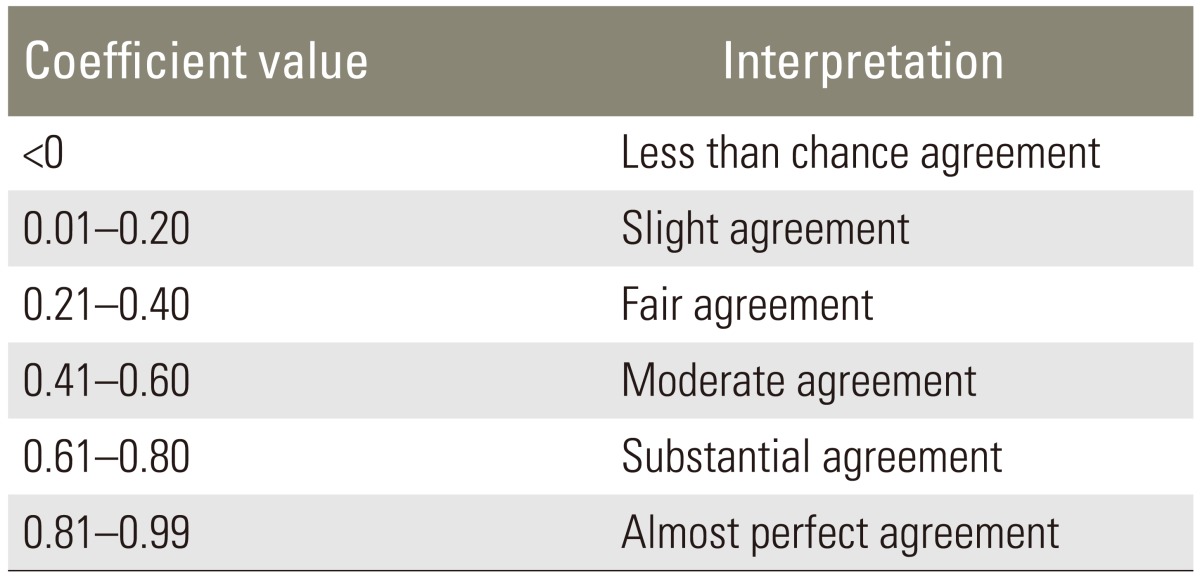

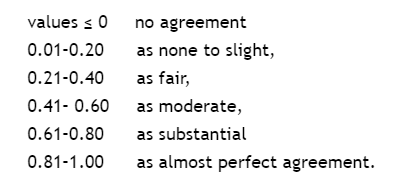

Comparison of rubrics for evaluating inter-rater kappa (and intra-class correlation) coefficients - Wikimedia Commons

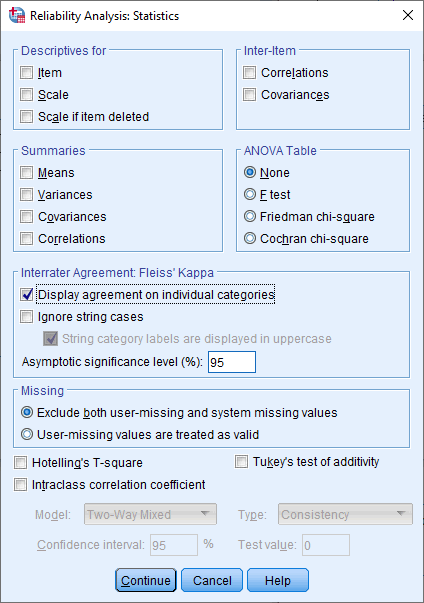

SUGI 24: Measurement of Interater Agreement: A SAS/IML® Macro Kappa Procedure for Handling Incomplete Data

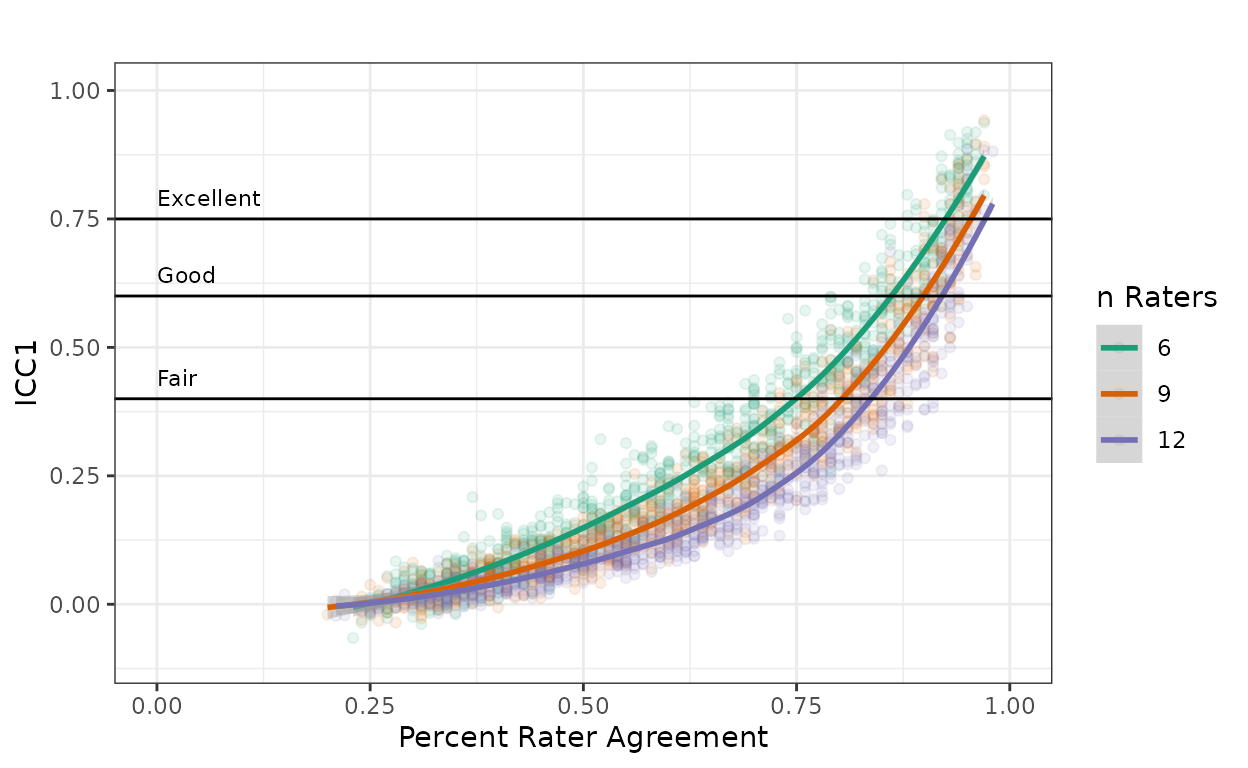

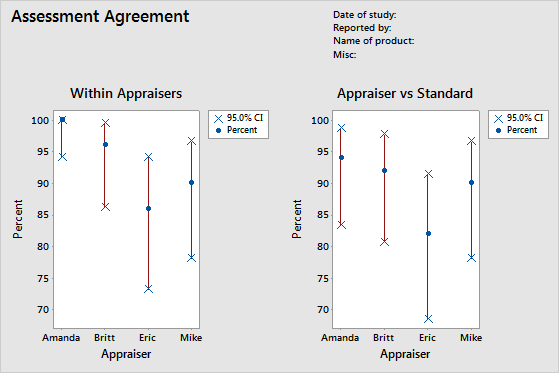

Rater Agreement in SAS using the Weighted Kappa and Intra-Cluster Correlation | by Dr. Marc Jacobs | Medium

![\[PDF\] Interrater reliability: the kappa statistic | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/bf3a7271860b1667e3ceb84e5bc400d2635ff8b7/7-Figure4-1.png)

![Fleiss' Kappa and Inter rater agreement interpretation \[24\]](https://www.researchgate.net/profile/Vijay-Sarthy-Sreedhara/publication/281652142/figure/tbl3/AS:613853020819479@1523365373663/Fleiss-Kappa-and-Inter-rater-agreement-interpretation-24.png)

![\[PDF\] Understanding interobserver agreement: the kappa statistic. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/2-Table1-1.png)